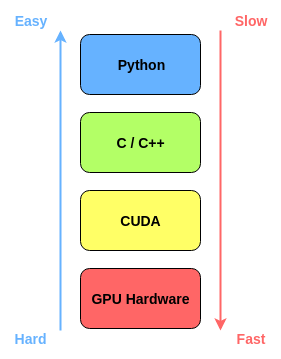

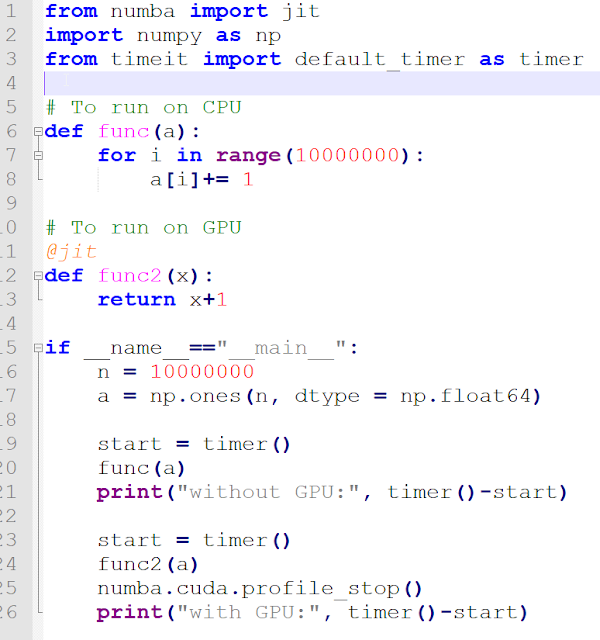

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

machine learning - How to make custom code in python utilize GPU while using Pytorch tensors and matrice functions - Stack Overflow

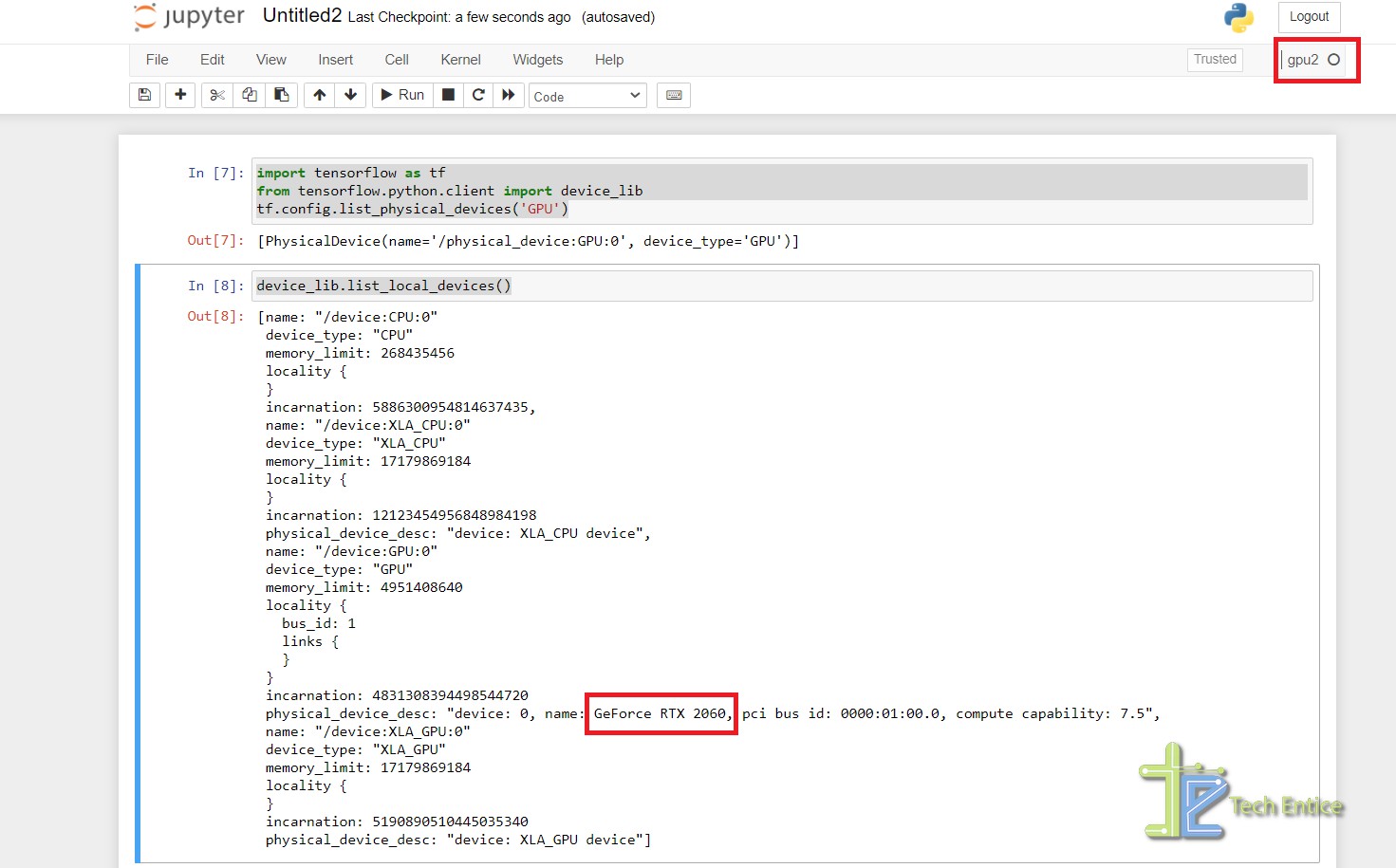

Why is the Python code not implementing on GPU? Tensorflow-gpu, CUDA, CUDANN installed - Stack Overflow

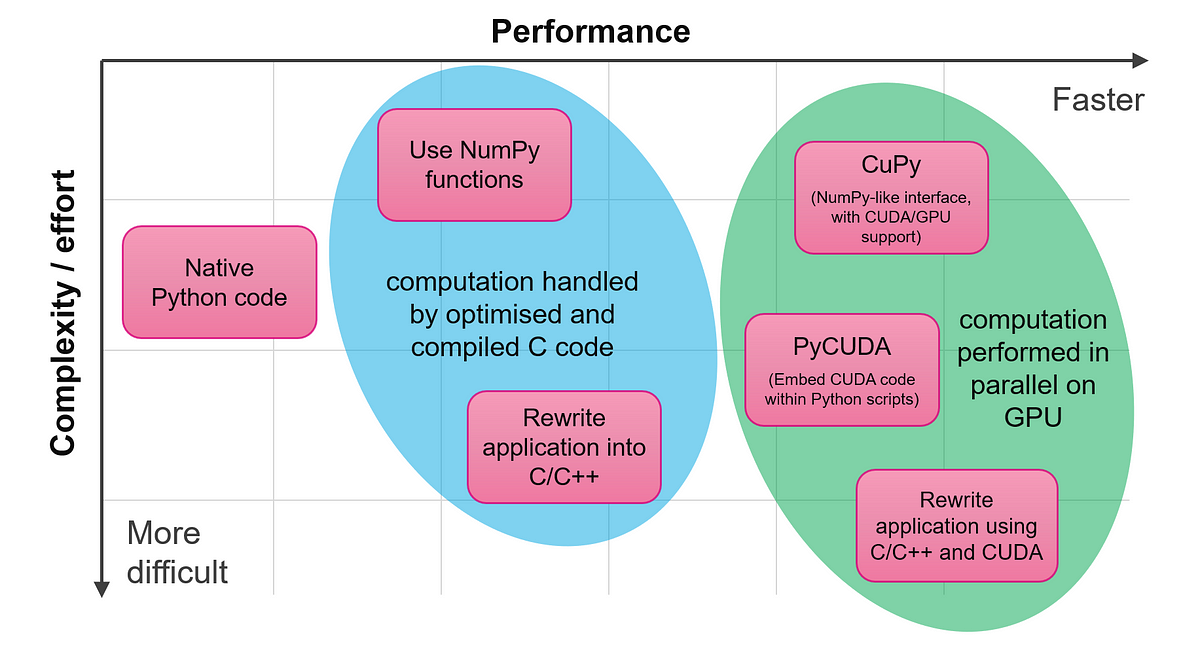

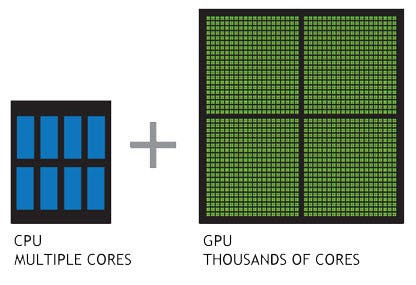

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

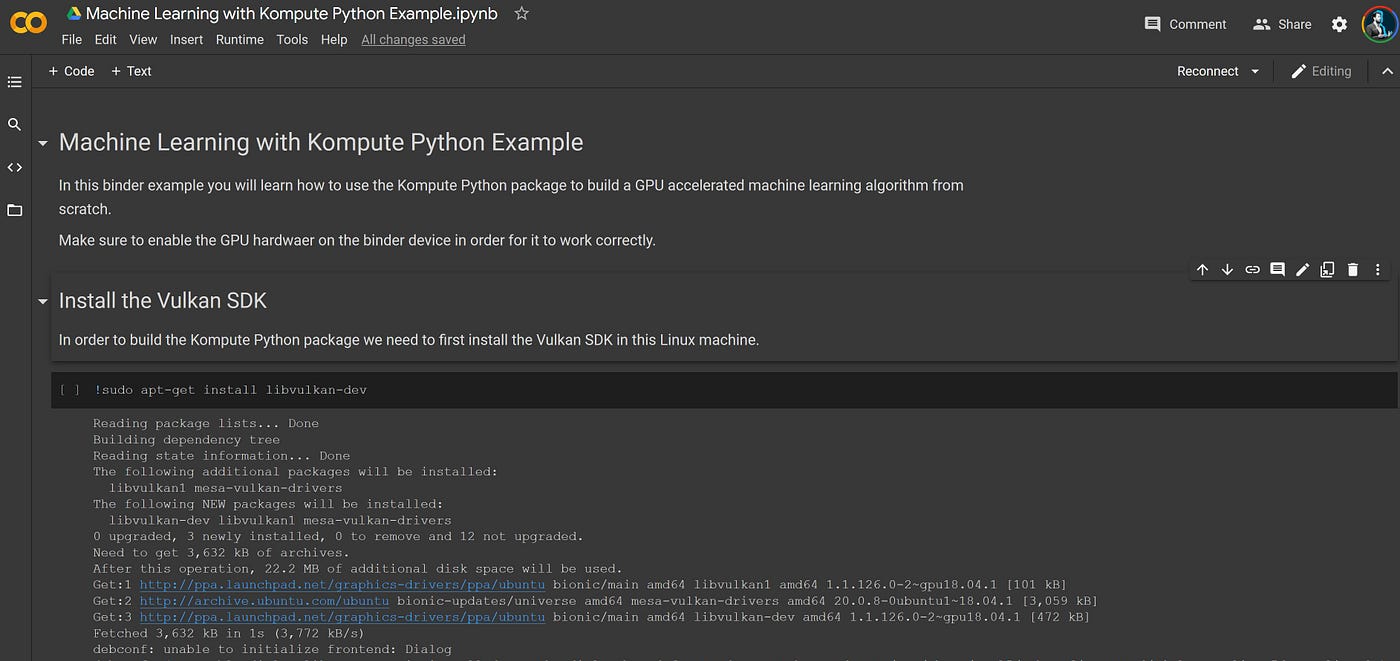

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science