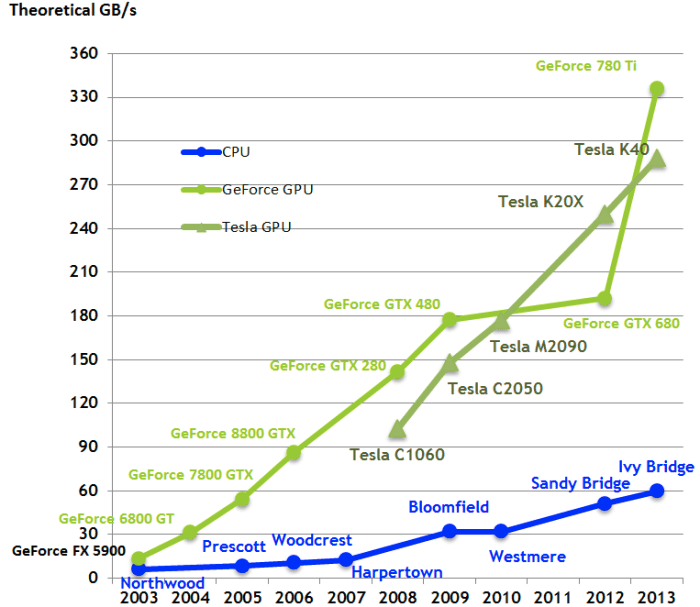

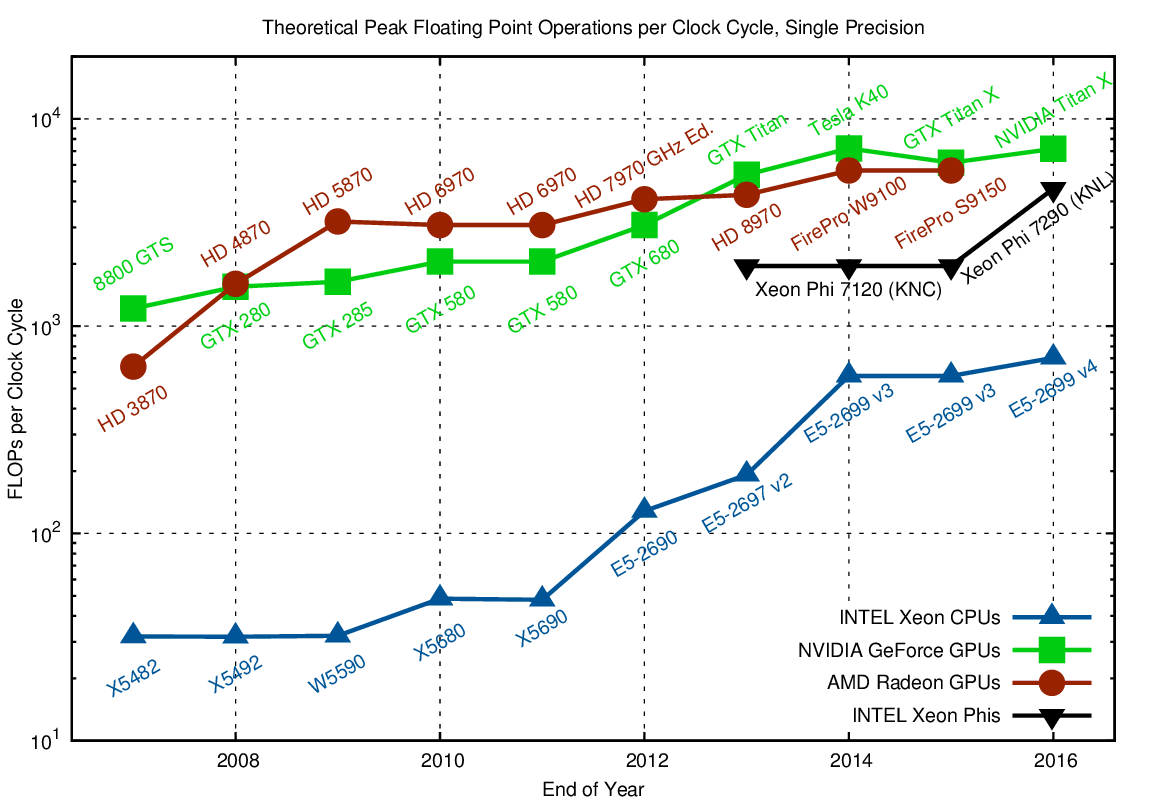

Comparison of CPU and GPU single precision floating point performance...

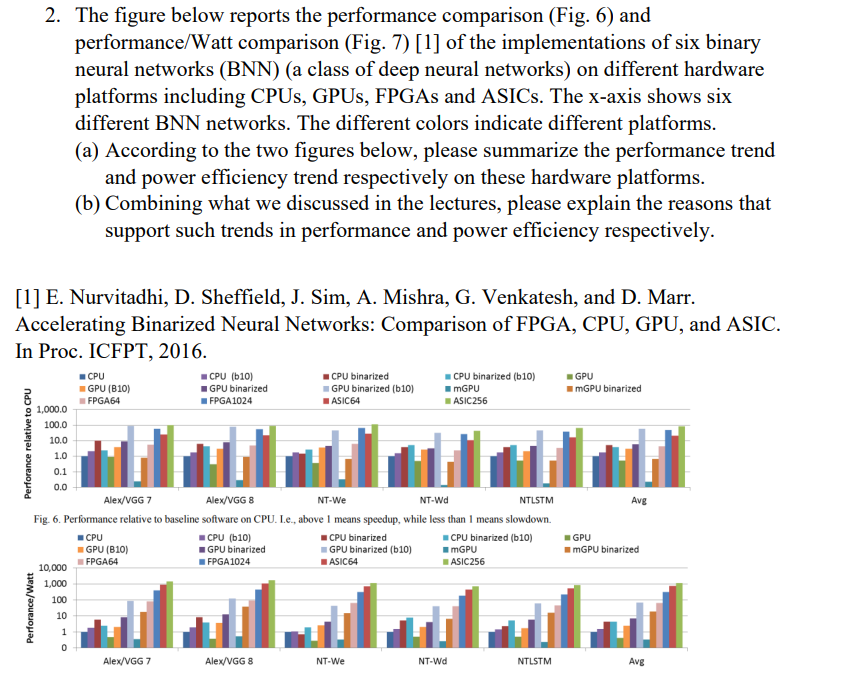

Performance Comparison between CPU, GPU, and FPGA. FPGA outperforms both...

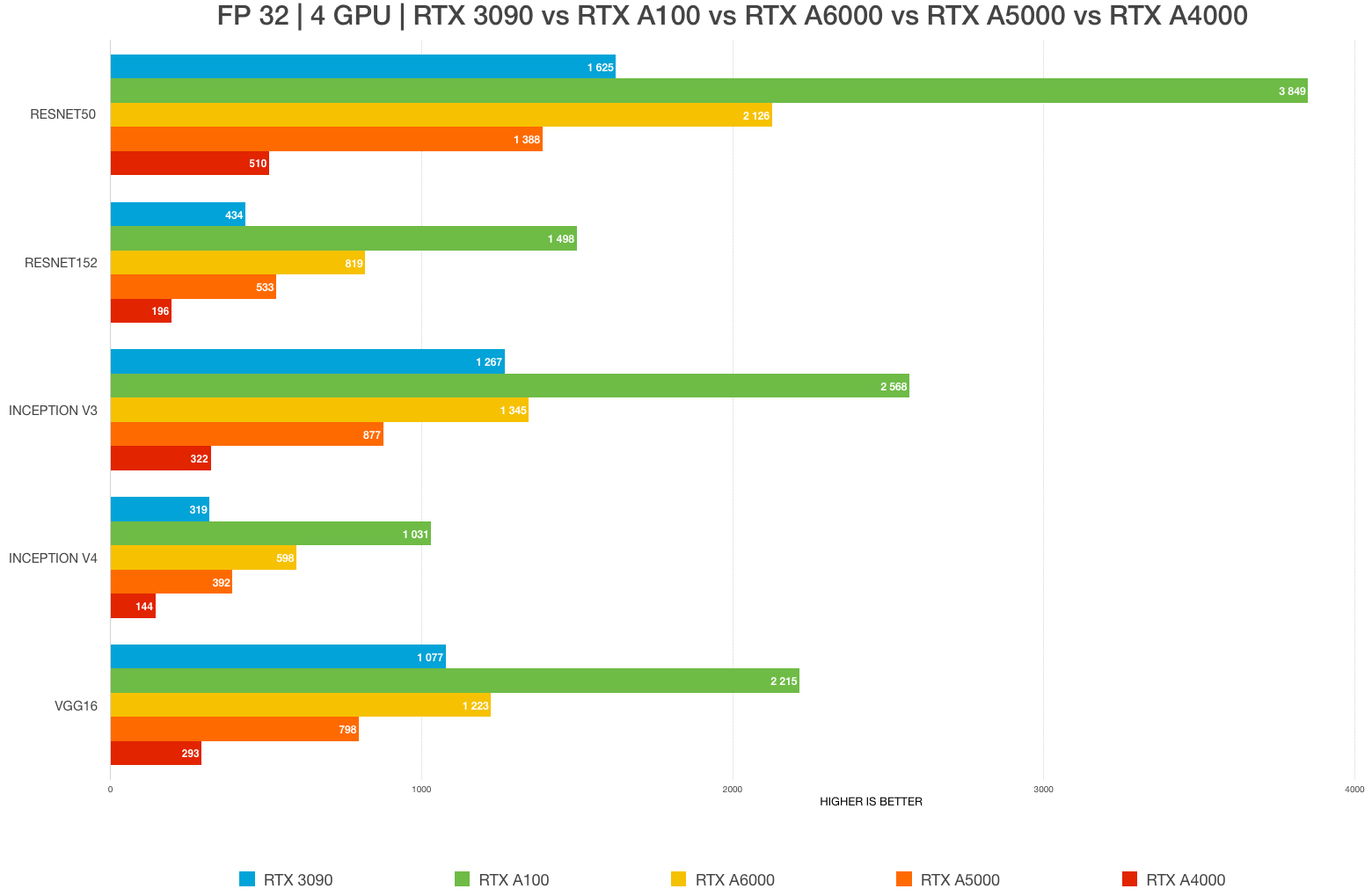

Best GPU for AI/ML, deep learning, data science in 2022–2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs. A6000 vs. A5000 vs. A100 benchmarks (FP32, FP16) (BIZON)